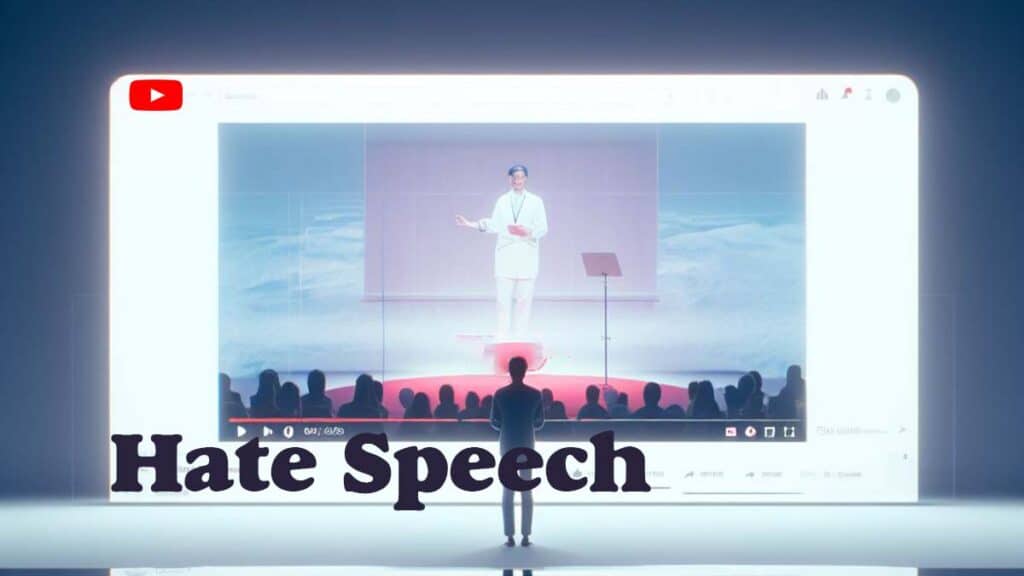

YouTube is a popular video-sharing platform that allows users to upload, view and share videos. It is one of the most visited websites in the world, with more than 2 billion active users. However, YouTube has also been criticized for its role in spreading hate speech.

There are several ways that YouTube contributes to hate speech. One is its recommendation algorithm. The system is designed to recommend videos that users are likely to be interested in. However, it can also amplify hate speech, because users who have already watched hateful videos are more likely to be recommended similar ones.

Another way YouTube supports hate speech is through monetization. YouTubers can earn money from their videos through ads. But this gives creators an incentive to make hateful videos, as such content can attract more viewers and therefore more money.

YouTube’s comment sections can also be a breeding ground for hate speech. This is because users can post comments anonymously on almost any video, regardless of whether they created it.

Finally, YouTube’s live streaming feature can be used to broadcast hate speech in real time. Because live streams are difficult to moderate as they happen, hate speech can spread quickly on the platform before it is removed.

Here are some examples of how YouTube has contributed to hate speech in the past.

- In 2019, YouTube was criticized for allowing white supremacist content to remain on its platform. This content included Holocaust denial videos and videos promoting violence against minorities.

- In 2020, YouTube was criticized for allowing QAnon conspiracy theories to spread on its platform. These conspiracy theories have been linked to real-world violence.

- In 2021, YouTube was criticized for allowing anti-vaccine content on its platform. Such content has been associated with lower vaccination rates and a higher incidence of preventable diseases.

YouTube has taken steps to address hate speech on its platform. For example, it revised its policies to ban certain forms of hate speech and invested in more content moderators. However, hate speech remains a problem on YouTube, and it is important to understand how the platform can contribute to it.

Here are some steps that can be taken to prevent YouTube from contributing to hate speech:

- YouTube needs to improve its algorithm to prevent it from promoting hate speech content.

- YouTube needs to do more to prevent creators from monetizing hate speech content.

- YouTube needs to do a better job of moderating comment sections and live streams.

- Users should report any hate speech they see.

- Users should support creators who make high-quality, inclusive content.

- Users need to learn more about different cultures and perspectives to challenge their own biases.

By taking these steps, we can help make YouTube a safer and more inclusive place.